Forecast Sparse Data Better: TiRex vs Traditional Models - Practical Tutorial

An xLSTM-based foundation model for zero-shot forecasting

If you follow recent research, you’ve likely seen headlines about xLSTM.

xLSTM[1] is a modern upgrade to the classic LSTM architecture, with strong results in computer vision[2], image segmentation[3], and related tasks.

The research team behind it pushed further — testing xLSTM for zero-shot time-series forecasting. The result is TiRex[4], a foundation model built for time-series tasks. Key characteristics of TiRex:

Pretrained on diverse data: Chronos, GIFT-Eval, and synthetic datasets.

Compact: Contains just 35M parameters, far smaller than models like Chronos-Bolt-Base (200M), Toto (151M), and TimesFM-2.0 (500M).

Top benchmark ranking: At the time of writing, TiRex ranks #1 on the GIFT-Eval benchmark, having overtaken Toto within a month. The competition is tight, a sign of rapid progress in this space!

Probabilistic forecasting: Predicts 9 quantiles, enabling decision-aware forecasting.
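
To make the quantile output concrete, here is a minimal sketch of how a 9-quantile forecast can be scored with the pinball (quantile) loss. The levels 0.1 to 0.9 and the array shapes are my assumptions for illustration; the function name `pinball_loss` is hypothetical and not part of the TiRex API.

```python
import numpy as np

# Assumed quantile levels (0.1 ... 0.9); the text above only says there are 9.
QUANTILE_LEVELS = np.linspace(0.1, 0.9, 9)


def pinball_loss(y_true, q_pred, levels=QUANTILE_LEVELS):
    """Mean pinball loss over all time steps and quantile levels.

    y_true: array of shape (horizon,)
    q_pred: array of shape (horizon, n_quantiles), one column per level
    """
    y_true = np.asarray(y_true, dtype=float)[:, None]  # (horizon, 1)
    q_pred = np.asarray(q_pred, dtype=float)
    if q_pred.shape != (y_true.shape[0], len(levels)):
        raise ValueError("q_pred must have shape (horizon, n_quantiles)")
    diff = y_true - q_pred
    # Under-prediction is weighted by the level, over-prediction by (1 - level).
    loss = np.maximum(levels * diff, (levels - 1.0) * diff)
    return float(loss.mean())
```

This asymmetry is what makes quantiles useful for decisions: if running out of stock is costly, you can plan against a high quantile (say 0.9) instead of the median.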

While testing TiRex, I found it performs unexpectedly well on intermittent data — a challenging area for many models.

In this article, we’ll go through 2 tutorials:

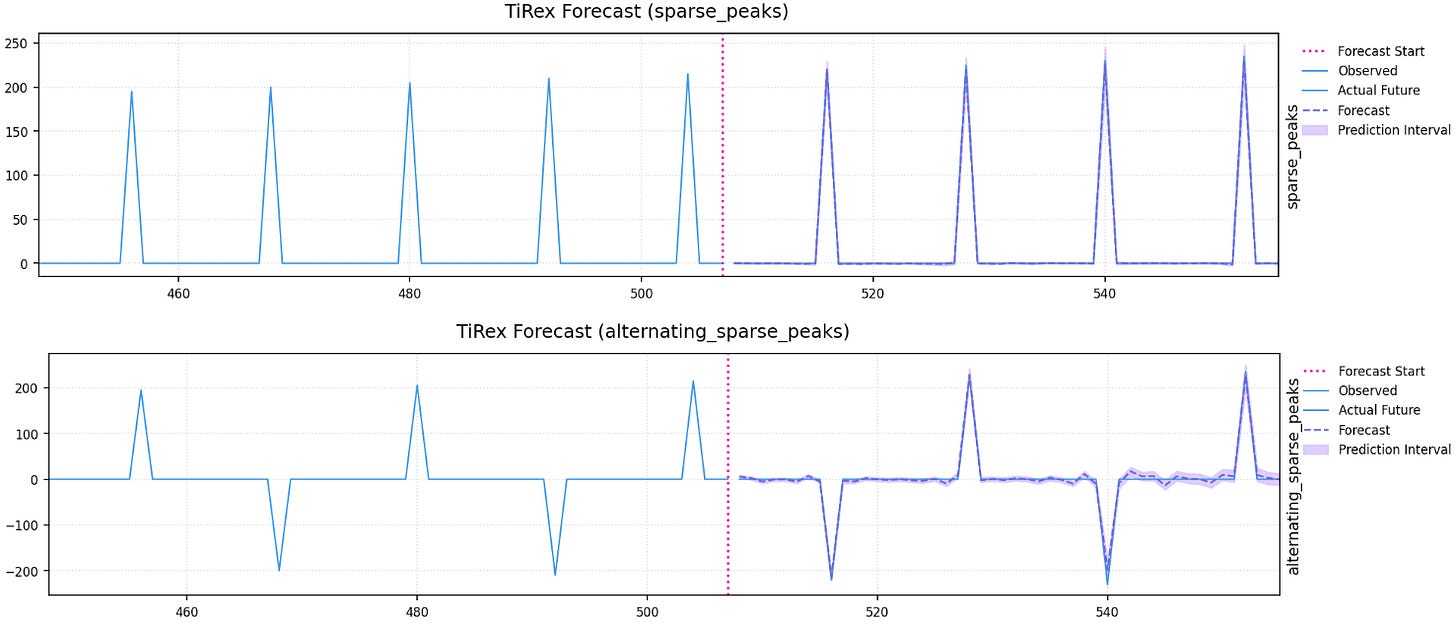

Compare TiRex with the recent foundation model Timer-XL on synthetic sparse data.

Benchmark TiRex against statistical models (IMAPA, Croston, etc.) on 2 BOOM datasets — featuring sparse and irregularly sampled series. As usual, we apply rigorous rolling origin evaluation on the full test set.
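
For readers new to these terms, below is a rough sketch of classic Croston forecasting wrapped in a rolling origin loop. It is a simplified illustration, not the exact protocol used in the tutorials: the smoothing value `alpha=0.1`, the one-step shift between origins, the MAE metric, and the names `croston_forecast` and `rolling_origin_mae` are all my assumptions.

```python
import numpy as np


def croston_forecast(y, alpha=0.1):
    """Classic Croston: smoothed demand size divided by smoothed interval."""
    y = np.asarray(y, dtype=float)
    nonzero = np.flatnonzero(y > 0)
    if nonzero.size == 0:
        return 0.0  # no demand observed yet
    first = nonzero[0]
    z_hat = y[first]          # smoothed non-zero demand size
    p_hat = float(first + 1)  # smoothed interval between demands
    q = 1                     # periods since the last non-zero demand
    for value in y[first + 1:]:
        if value > 0:
            z_hat = alpha * value + (1 - alpha) * z_hat
            p_hat = alpha * q + (1 - alpha) * p_hat
            q = 1
        else:
            q += 1
    return z_hat / p_hat


def rolling_origin_mae(y, horizon, n_origins, forecaster):
    """Average MAE over n_origins cutoffs, each shifted forward by one step."""
    y = np.asarray(y, dtype=float)
    if len(y) < horizon + n_origins:
        raise ValueError("series too short for this horizon and n_origins")
    errors = []
    for k in range(n_origins):
        cutoff = len(y) - horizon - (n_origins - 1 - k)
        train, test = y[:cutoff], y[cutoff:cutoff + horizon]
        # Croston yields a flat forecast, repeated across the horizon.
        pred = np.full(horizon, forecaster(train))
        errors.append(np.mean(np.abs(test - pred)))
    return float(np.mean(errors))
```

The key point is that the model only ever sees data before each cutoff, and the score is averaged over several cutoffs rather than a single train/test split.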

Let’s get started!

Part 1 - TiRex vs Timer-XL on Synthetic Sparse Data

In this section, we benchmark the two models, TiRex and Timer-XL, on a simple sparse-data task that is part of the same setup I used when testing DeepSeek.

The goal is to assess whether foundation models can handle intermittent data, where only a few specialized models perform well without manual tuning.
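
If you want a feel for what intermittent data looks like, the sketch below generates a toy sparse series. This is not the generator used in this benchmark; the function name `make_intermittent_series`, the Bernoulli/Poisson recipe, and all default parameters are hypothetical choices for illustration.

```python
import numpy as np


def make_intermittent_series(length=200, demand_prob=0.2, mean_size=5.0, seed=0):
    """Toy intermittent series: mostly zeros with occasional positive demand."""
    rng = np.random.default_rng(seed)
    # Each period has demand with probability demand_prob (Bernoulli draw).
    occurs = rng.random(length) < demand_prob
    # Demand sizes are shifted Poisson draws, so they are always at least 1.
    sizes = rng.poisson(mean_size, size=length) + 1
    return np.where(occurs, sizes, 0).astype(float)


series = make_intermittent_series()
zero_share = float((series == 0).mean())  # fraction of zero periods
```

Most values are zero, and both the timing and the size of the non-zero demands are random, which is what makes this kind of series hard for general-purpose models.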