MOIRAI: Zero-Shot Forecasting Without Training - Complete Tutorial

A powerful time-series foundation model by Salesforce

How can a model forecast a time series without being trained on it?

At first, it seems counter-intuitive.

However, recent foundation models have adopted many techniques from the LLM literature. Most importantly, scaling laws seem to apply to time series as well: given more data, longer training, and more parameters, these models perform better.

We discussed the ‘secret sauce’ of these models in our previous article.

This article will briefly discuss MOIRAI and build an end-to-end project on Energy Demand Forecasting. Specifically, we will cover:

A brief description of how MOIRAI works.

How to prepare the data for MOIRAI (with external covariates).

How to use MOIRAI correctly for optimal performance.

Finally, we’ll use MOIRAI to outperform some popular statistical models!

Let’s get started!


Enter MOIRAI

What differentiates MOIRAI from other foundation models is its ability to incorporate external covariates — both past observed and future known variables.

In principle, TimesFM and TTM can also accept external covariates, but the extended versions that support them are expected to be released in the future (based on discussions with the respective authors).

Key features of MOIRAI include:

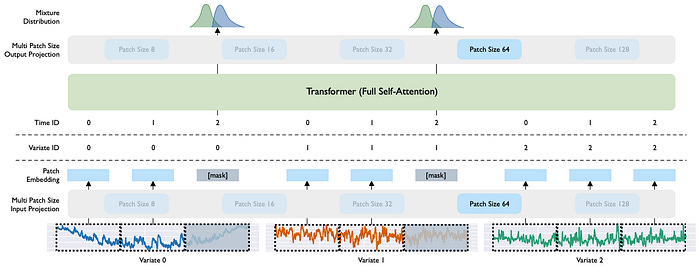

Multi-Patch Layers: MOIRAI adapts to multiple frequencies by using a different patch size for each frequency, with a separate projection layer learned for each patch size.

Any-variate Attention: An elegant attention mechanism that respects permutation equivariance across variates and captures the temporal dynamics between datapoints.

Mixture of parametric distributions: MOIRAI learns the parameters of a mixture of distributions rather than assuming a single one.
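To make the mixture idea concrete, here is a minimal sketch in plain Python. It is not MOIRAI's implementation: the two components (a normal and a log-normal), the fixed weights, and the helper names `normal_pdf`, `lognormal_pdf`, `mixture_pdf`, and `mixture_nll` are all assumptions chosen for illustration. In a real model, the network would output the mixture weights and component parameters, and training would minimize a negative log-likelihood of roughly this kind.

```python
import math


def normal_pdf(x, mean, std):
    """Density of a normal distribution at x."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))


def lognormal_pdf(x, mu, sigma):
    """Density of a log-normal distribution at x (zero for x <= 0)."""
    if x <= 0:
        return 0.0
    z = (math.log(x) - mu) / sigma
    return math.exp(-0.5 * z * z) / (x * sigma * math.sqrt(2.0 * math.pi))


def mixture_pdf(x, weights, components):
    """Weighted sum of component densities; weights should sum to 1."""
    return sum(w * pdf(x) for w, pdf in zip(weights, components))


def mixture_nll(observations, weights, components):
    """Average negative log-likelihood of the observations under the mixture."""
    log_likelihood = sum(
        math.log(mixture_pdf(x, weights, components)) for x in observations
    )
    return -log_likelihood / len(observations)


# Hypothetical example: a symmetric component plus a right-skewed one.
example_components = [
    lambda x: normal_pdf(x, 0.0, 1.0),
    lambda x: lognormal_pdf(x, 0.0, 0.5),
]
example_weights = [0.4, 0.6]
print(mixture_nll([0.5, 1.0, 2.0], example_weights, example_components))
```

The point of the mixture is flexibility: a single family has to fit every series, while a weighted combination can lean on whichever component suits the data at hand.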

Compared to TimesFM, MOIRAI introduces many novel features for time series. It modifies the traditional attention mechanism (Any-variate Attention) and considers different time-series frequencies.
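The idea of tying the patch size to the sampling frequency can be sketched in a few lines of plain Python. This is an illustration only, not the library's code: the values in `PATCH_SIZE_BY_FREQ`, the left zero-padding, and the `to_patches` helper are assumptions made for the example. In the model, each patch would then pass through a projection layer, which is omitted here.

```python
# Illustrative placeholder mapping from frequency to patch size (an assumption,
# not values taken from this article).
PATCH_SIZE_BY_FREQ = {"D": 16, "H": 32, "15min": 64}


def to_patches(series, patch_size, pad_value=0.0):
    """Split a series into non-overlapping patches of equal length.

    The series is padded on the left so that the most recent observations
    always fill the end of the last patch.
    """
    if patch_size <= 0:
        raise ValueError("patch_size must be positive")
    pad = (-len(series)) % patch_size
    padded = [pad_value] * pad + list(series)
    return [padded[i:i + patch_size] for i in range(0, len(padded), patch_size)]


# Hypothetical hourly series with 70 observations.
hourly_series = [float(v) for v in range(70)]
patches = to_patches(hourly_series, PATCH_SIZE_BY_FREQ["H"])
print(len(patches), len(patches[0]))
```

Larger patches suit high-frequency data because they cover the same time span with fewer tokens, which keeps the attention cost manageable on long contexts.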

However, the effectiveness of MOIRAI (and every foundation model) lies in the pretraining dataset. MOIRAI was pretrained on LOTSA, a massive collection of 27 billion observations across nine domains.

This extensive dataset, combined with the model’s innovative architecture, makes MOIRAI ideal as a zero-shot forecaster — able to predict unseen data quickly and accurately.

MOIRAI Tutorial

Find the full tutorial in the AI Projects folder.

In this article, we’ll build a capstone project on MOIRAI using the Electricity dataset (ElectricityLoadDiagrams20112014 from UCI).