TabPFN-TS: A Surprising New Breakthrough in Time-Series Forecasting

Pretrained on tabular data, yet ranked #1 on the GIFT-Eval time-series benchmark. Tutorial included!

Tabular data remains a complex challenge in Deep Learning.

TabPFN[1] (based on Prior-Data Fitted Networks) is a DL model for tabular data. Its latest version, TabPFN-v2[2], has been repurposed as TabPFN-TS[3] for time-series forecasting.

The model is open-source and shows strong potential—its research team recently secured €9 million in funding from investors like Hugging Face.

TabPFN-TS made headlines because its underlying model, TabPFN-v2, was pretrained entirely on synthetic tabular data and still transferred to time-series forecasting without time-series-specific pretraining. TabPFN-TS outperformed other foundation models and ranked first on the GIFT-Eval benchmark!

Key characteristics of TabPFN-TS:

Foundation Model: Trained on diverse synthetic tabular datasets, enabling strong generalization to unseen data.

In-Context Learning: The historical observations are passed to the model as context, and predictions come from a single forward pass, with no gradient updates or fine-tuning on the target dataset.

Efficiency: Just 11M parameters—outperforming much larger models like Chronos-Large (710M parameters).

Simplicity: It uses only basic calendar features, avoiding complex lagged or rolling transformations. It also supports exogenous variables.
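To make the "basic calendar features" idea concrete, here is a minimal sketch of how timestamps can be turned into a plain feature table, so that forecasting becomes ordinary tabular regression. This is an illustration only: the function name `make_calendar_features`, the choice of features, and the sine/cosine encoding are my assumptions, not necessarily the exact feature set TabPFN-TS builds internally.

```python
import numpy as np
import pandas as pd


def make_calendar_features(timestamps: pd.DatetimeIndex, start: int = 0) -> pd.DataFrame:
    """Build a feature table from timestamps alone (no lags, no rolling windows).

    Hypothetical helper for illustration; not part of any TabPFN package.
    """
    features = pd.DataFrame(index=timestamps)
    # A running index lets a tabular model pick up trend.
    features["running_index"] = np.arange(start, start + len(timestamps))
    features["year"] = np.asarray(timestamps.year)

    # Cyclical encodings so that, e.g., hour 23 sits next to hour 0.
    cyclical = [
        ("hour_of_day", timestamps.hour, 24),
        ("day_of_week", timestamps.dayofweek, 7),
        ("day_of_month", timestamps.day, 31),
        ("month_of_year", timestamps.month, 12),
    ]
    for name, values, period in cyclical:
        angle = 2 * np.pi * np.asarray(values) / period
        features[f"{name}_sin"] = np.sin(angle)
        features[f"{name}_cos"] = np.cos(angle)
    return features


# Known history: 48 hourly steps. Forecast horizon: the next 24 hours.
step = pd.Timedelta(hours=1)
history = pd.date_range("2024-01-01", periods=48, freq=step)
horizon = pd.date_range(history[-1] + step, periods=24, freq=step)

X_train = make_calendar_features(history)
X_test = make_calendar_features(horizon, start=len(history))
```

With the observed values as the target column for `X_train`, any tabular regressor can then predict the target for the rows in `X_test`. Every feature of a future row is known in advance, which is why no lagged values are needed.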

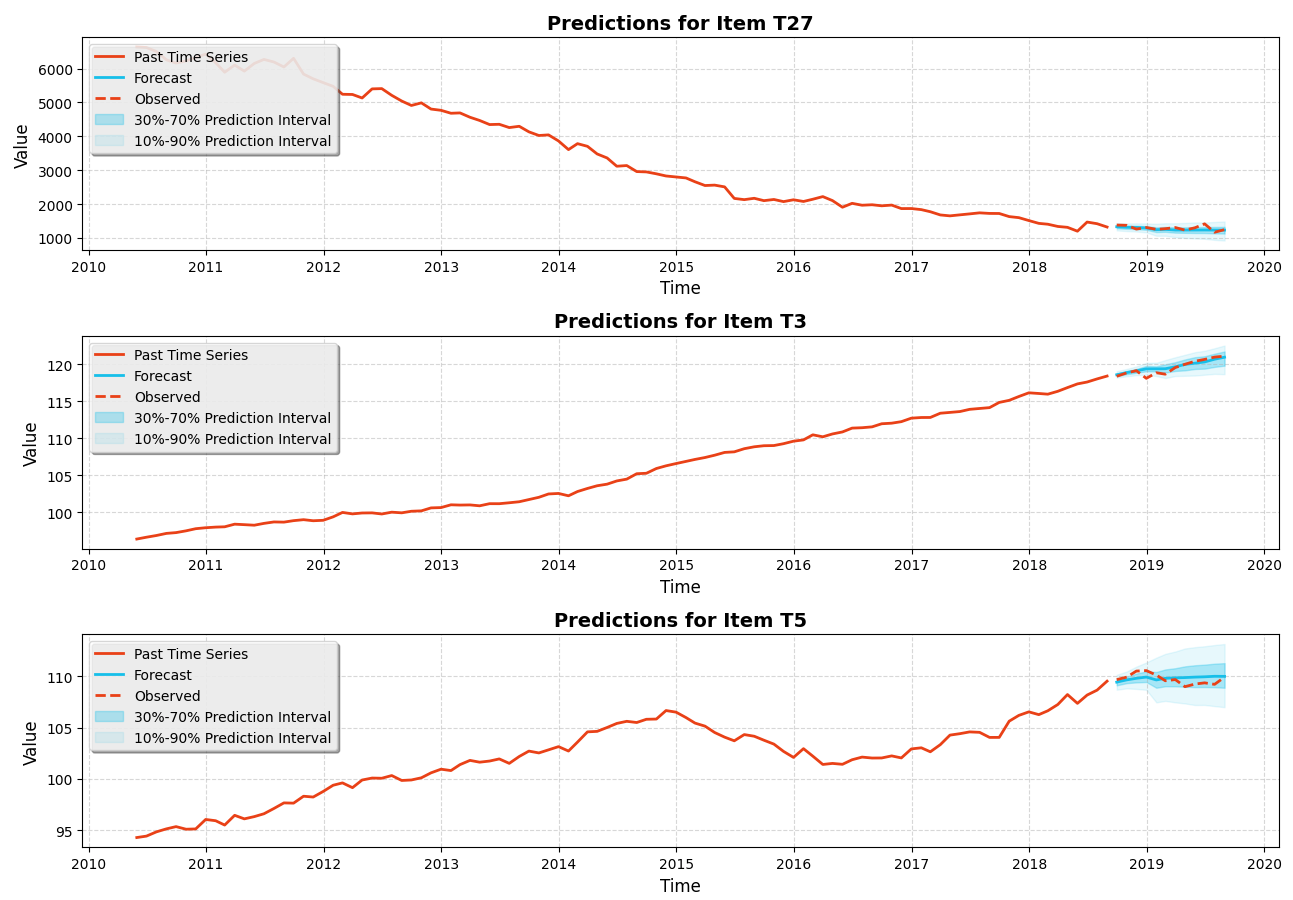

This article demonstrates how to use TabPFN-TS and includes a minimal benchmark comparing it with other models.

Let’s get started!

✅ Find the TabPFN-TS notebook in the AI Projects folder (Project 14)

Download the Dataset

Let’s start by downloading our data.