TimesFM-2.5: Hands-On Tutorial with Google's Upgraded Forecasting Model

Google's foundation forecasting model just received its third upgrade!

Looks like the end of summer reignited pretrained time-series research!

Last week, we explored MOIRAI-2, Salesforce’s model that hit #1 on GIFT-Eval.

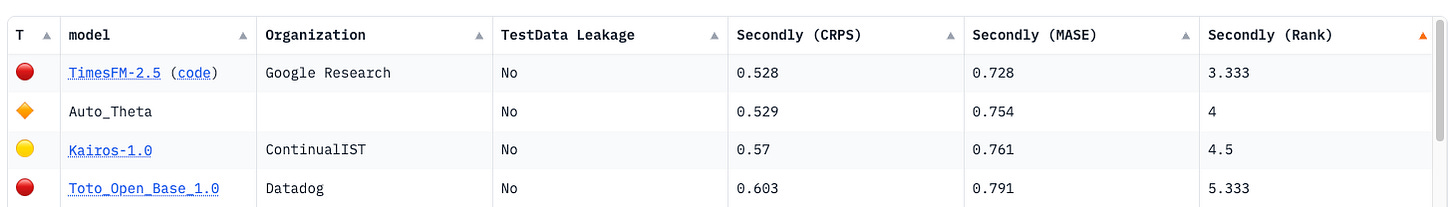

Now Google is back with TimesFM-2.5, the third upgrade in the series. It smashed benchmarks again, ranking #1 among open-source models on GIFT-Eval.

Even better, TimesFM-2.5 became the first foundation model to beat AutoTheta on second-level frequency data, a long-standing weak spot for foundation models.

In this article, we’ll take TimesFM-2.5 for a spin and compare it with top statistical models, such as AutoARIMA and AutoETS.

We’ll use rolling-origin forecasts across 10 validation windows for a fair, rigorous test.
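If rolling-origin evaluation is new to you, here is a minimal sketch of the idea: the forecast origin moves forward window by window, the model only sees data up to that origin, and errors are averaged over all windows. The helper name `rolling_origin_splits`, the toy series, the horizon of 5, the expanding training window, and the naive last-value forecaster are all assumptions made for illustration. They are not the notebook's actual setup.

```python
from statistics import mean

def rolling_origin_splits(n_obs, horizon, n_windows=10, step=None):
    """Yield (train_idx, test_idx) ranges for expanding-window evaluation.

    Hypothetical helper: the last window ends at the final observation,
    and earlier windows are shifted back by `step` points each.
    """
    step = horizon if step is None else step
    first_cutoff = n_obs - horizon - (n_windows - 1) * step
    if first_cutoff <= 0:
        raise ValueError("series too short for the requested windows")
    for w in range(n_windows):
        cutoff = first_cutoff + w * step
        # Train on everything before the cutoff, test on the next `horizon` points.
        yield range(cutoff), range(cutoff, cutoff + horizon)

# Toy series (made-up data): a simple upward trend.
y = [float(t) for t in range(100)]
horizon = 5  # assumed value, chosen only for this example

maes = []
for train_idx, test_idx in rolling_origin_splits(len(y), horizon, n_windows=10):
    # Naive stand-in for a real model: repeat the last observed value.
    last_value = y[train_idx[-1]]
    forecast = [last_value] * horizon
    actual = [y[i] for i in test_idx]
    maes.append(mean(abs(a - f) for a, f in zip(actual, forecast)))

print(len(maes), mean(maes))
```

Swapping the naive forecaster for any model that takes the training slice and returns `horizon` predictions gives the same protocol. Every model is scored on identical windows, which is what makes the comparison fair.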

Let’s get started!

✅ Find the notebook for this article here: AI Projects Folder (Project 24)

Enter TimesFM-2.5

Google Research hasn’t published a paper on TimesFM-2.5 yet.

So I compiled all available details from the model’s GitHub repo and its config.json on Hugging Face.

These are: