Will Transformers Revolutionize Time-Series Forecasting? Advanced Insights, Part 1

With data from industry, academia and leading researchers

At the heart of the “Generative AI revolution” is the Transformer model — introduced by Google in 2017.

But every tech revolution creates confusion. In a period of rapid growth, it’s difficult to assess innovations without bias, let alone estimate their impact.

Transformers, which kickstarted this AI breakthrough, have become a “controversial model”. There are 2 extreme viewpoints:

Zealous adopters: They use Transformers everywhere, including outside of NLP, sometimes even when they don’t want to, because their employers, managers, or investors push them to.

Skeptics and Luddites: They criticize AI models, including Transformers. They can’t accept that scaling a model with more data and layers can often outperform elegant mathematical models based on rigorous proofs.

Now that the dust is settling, it’s time for impartial research.

This article focuses on Transformers for forecasting. I will discuss the latest developments from academia, industry, and leading researchers.

I will also explain how and under what circumstances Transformer-based forecasting models should be used for optimal efficiency.

I will split this analysis into 2 parts:

Background: How Transformers and Deep Learning started in forecasting.

Latest Developments: A deeper dive into recent advancements and cutting-edge time-series models. The 2nd part is here.

Let’s dive in.

Horizon AI Forecast is a reader-supported newsletter, featuring occasional bonus posts for supporters. Your support through a paid subscription would be greatly appreciated.

A Brief History of Deep Learning in Forecasting

In 2012, the AlexNet model revolutionized computer vision and marked the rise of Deep Learning. Some could say it was the year Deep Learning was born (or when neural networks were rebranded).

Initially, Deep Learning focused on natural language processing (NLP) and computer vision, leaving fields like time-series forecasting largely unexplored.

Two key models in NLP were Word2Vec and LSTMs with attention. LSTMs, for instance, powered Google's Neural Machine Translation (Figure 1), the backbone of Google Translate at that time:

Around the same time, researchers and enterprises started applying LSTMs to time-series forecasting. After all, text translation is a sequence-to-sequence task, and so is time-series forecasting (in multi-step prediction scenarios).
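To make the sequence-to-sequence framing concrete, here is a minimal NumPy sketch of how a single series is commonly cut into training pairs for multi-step forecasting. The function name `make_windows` and the toy series are hypothetical. Each input window of `input_len` observations is paired with the `horizon` values that immediately follow it, which is the kind of (input sequence, output sequence) data an encoder-decoder LSTM would consume.

```python
import numpy as np

def make_windows(series: np.ndarray, input_len: int, horizon: int):
    """Split a 1-D series into (input, target) pairs for multi-step forecasting.

    Each input holds `input_len` consecutive observations; each target holds
    the `horizon` observations that immediately follow that input.
    """
    n_windows = len(series) - input_len - horizon + 1
    if n_windows <= 0:
        raise ValueError("series is too short for this input_len/horizon")
    X = np.stack([series[i:i + input_len] for i in range(n_windows)])
    Y = np.stack([series[i + input_len:i + input_len + horizon]
                  for i in range(n_windows)])
    return X, Y

series = np.arange(10, dtype=float)  # toy series: 0, 1, ..., 9
X, Y = make_windows(series, input_len=4, horizon=3)
print(X.shape, Y.shape)  # (4, 4) (4, 3)
```

Every row of `X` is a "source sentence" and the matching row of `Y` is its "translation", which is why machine-translation architectures looked like a natural fit.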

But it’s not that simple. While LSTMs made significant breakthroughs in NLP and machine translation tasks, there was hardly a revolution in time-series forecasting. Sure, LSTMs have some advantages over statistical methods (they don’t require extra preprocessing such as making the series stationary, they can incorporate future-known covariates, etc.).

There were tasks and datasets where LSTMs were better, but there was no proof of a major paradigm shift.

This is evident in practice. In a 2018 paper by Makridakis et al., various time-series models were benchmarked. ML and DL models, including LSTMs, performed poorly, with LSTMs ranking the worst! (Figure 2)

Eventually, in the fourth Makridakis Competition (M4), the winning solution was ES-RNN, a hybrid LSTM and Exponential Smoothing model developed at Uber.
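As a rough illustration of the exponential-smoothing half of such a hybrid, the sketch below computes a simple exponential smoothing level and uses it to normalize a window before that window would be passed to an RNN. This is a simplification under stated assumptions: level-only smoothing, a fixed `alpha`, and the helper name `ses_levels` are illustrative choices. The actual ES-RNN also modeled seasonality and learned its smoothing parameters per series, and the LSTM part is omitted entirely here.

```python
import numpy as np

def ses_levels(y: np.ndarray, alpha: float) -> np.ndarray:
    """Simple exponential smoothing: l_t = alpha * y_t + (1 - alpha) * l_{t-1}."""
    levels = np.empty_like(y, dtype=float)
    levels[0] = y[0]  # initialize the level with the first observation
    for t in range(1, len(y)):
        levels[t] = alpha * y[t] + (1 - alpha) * levels[t - 1]
    return levels

y = np.array([100.0, 110.0, 105.0, 120.0, 130.0, 125.0])
levels = ses_levels(y, alpha=0.5)

# Divide the most recent window by the latest level, so that series with very
# different scales land in a comparable range before reaching the (omitted) RNN.
window = y[-4:]
normalized = window / levels[-1]
```

The division is the key design idea: the statistical component removes the scale, so the neural component can focus on learning shape patterns shared across many series.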

The problem was evident: Researchers were trying to force Deep Learning methods into time-series forecasting without proper adaptation.

But time-series forecasting is an elusive task, and naively adding more layers or neurons doesn't lead to breakthroughs. A lesser-known but effective model was the Temporal Convolutional Network (TCN), a hidden gem based on DeepMind’s WaveNet that adapts CNNs for time-series forecasting (Figure 3):

TCNs are still used today, either standalone or as parts of other models, and they often match or outperform LSTMs on forecasting tasks. Because convolutions can be parallelized across time steps, they also train faster. TCNs appear in financial and trading applications as well.
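The core building block of a TCN is the dilated causal convolution. Below is a minimal NumPy sketch, assuming a single channel, no bias, and no residual connections (real TCNs typically add all three); the function names are hypothetical. Each output depends only on current and past inputs, and stacking layers with dilations 1, 2, 4 grows the receptive field exponentially: for kernel size k it equals 1 + sum of (k - 1) * d over the layers.

```python
import numpy as np

def causal_dilated_conv1d(x: np.ndarray, w: np.ndarray, dilation: int) -> np.ndarray:
    """1-D causal convolution with dilation.

    y[t] = sum_j w[j] * x[t - j * dilation], treating x as 0 before t = 0,
    so each output depends only on current and past inputs.
    """
    k = len(w)
    pad = (k - 1) * dilation
    x_padded = np.concatenate([np.zeros(pad), x])  # left-pad only: no future leakage
    y = np.zeros(len(x))
    for t in range(len(x)):  # each t is independent, so this loop is parallelizable
        for j in range(k):
            y[t] += w[j] * x_padded[pad + t - j * dilation]
    return y

def receptive_field(kernel_size: int, dilations) -> int:
    """Number of past-and-current inputs that one output of the stack can see."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)

# Push a unit impulse at t = 0 through three layers with dilations 1, 2, 4.
x = np.zeros(16)
x[0] = 1.0
h = x
for d in (1, 2, 4):
    h = causal_dilated_conv1d(h, np.ones(2), dilation=d)
print(np.nonzero(h)[0])  # [0 1 2 3 4 5 6 7]
```

The impulse reaches exactly the first 8 outputs, matching `receptive_field(2, (1, 2, 4))`. Since no output position waits on another, all of them can be computed at once, unlike an LSTM, which must process time steps sequentially.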

To revolutionize time-series forecasting, Deep Learning needed a different approach. We'll explore this next.

Deep Learning Forecasting — First Sparks (2017-2019)

Remember Word2Vec, which we mentioned earlier? This method popularized learned embeddings: dense vectors that allow DL models to take words from a finite vocabulary as inputs.

But Word2Vec also meant: “Hey, we can now use categorical variables in neural networks”.

Using embeddings, we could model multiple time series at once — in other words, build a global model that benefits from cross-learning.
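Here is a minimal sketch of what the input to such a global model might look like, assuming a learned embedding vector per series ID concatenated with that series' recent values. The random embedding table, the helper `build_features`, and the linear "model" are hypothetical stand-ins for trained neural-network components. The point is that one set of weights serves every series.

```python
import numpy as np

rng = np.random.default_rng(0)

n_series, emb_dim, input_len = 3, 2, 4

# One vector per series ID. Randomly initialized here; in a real model these
# rows are trained jointly with the rest of the network.
embedding_table = rng.normal(size=(n_series, emb_dim))

def build_features(series_id: int, window: np.ndarray) -> np.ndarray:
    """Concatenate a series' embedding with its recent lag window."""
    return np.concatenate([embedding_table[series_id], window])

# Windows from three different series feed the SAME model: this shared
# training is the "cross-learning" of a global model.
windows = {0: np.array([1.0, 2.0, 3.0, 4.0]),
           1: np.array([10.0, 9.0, 8.0, 7.0]),
           2: np.array([5.0, 5.0, 6.0, 6.0])}
batch = np.stack([build_features(sid, w) for sid, w in windows.items()])

# A single shared linear map (hypothetical stand-in for a neural network).
weights = rng.normal(size=emb_dim + input_len)
predictions = batch @ weights  # one next-step prediction per series
```

The embedding lets the shared model tell series apart, while the shared weights let patterns learned on one series help forecast the others.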

But then researchers quickly realized it wasn’t embeddings or deeper models alone that increased performance. It was elegant architectures that leveraged statistical concepts, combined with more data.

Two successful models from this era were DeepAR by Amazon Research and N-BEATS by Element AI (a startup co-founded by Yoshua Bengio). Later, Google released the Temporal Fusion Transformer (TFT), a powerful model that remains SOTA today. TFT is one of the top-performing models in Nixtla’s mega-benchmark (more on that in the 2nd part).

Note: Find hands-on projects for all these models in the AI Projects folder — which is constantly updated with fresh tutorials on the latest time-series models!

The first major breakthrough was N-BEATS, which outperformed the M4 competition winner:

N-BEATS is a milestone model because:

It combines Deep Learning with a Signal Processing philosophy.

It’s interpretable (it decomposes forecasts into trend and seasonality).

It supports transfer learning.

Before N-BEATS, no Deep Learning forecasting model had all these capabilities. One could say that:

N-BEATS succeeded as a DL model by respecting and leveraging the statistical properties of time series in its architecture.
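The interpretability in the list above comes from how N-BEATS builds its forecasts. In its interpretable configuration, blocks output coefficients for fixed basis functions: a polynomial basis for trend and a Fourier basis for seasonality. The sketch below assumes those two bases and hard-codes the coefficients (in the real model, fully connected layers predict them from the input window). The horizon, polynomial degree, number of harmonics, and function names are arbitrary illustrative choices.

```python
import numpy as np

def trend_basis(horizon: int, degree: int) -> np.ndarray:
    """Polynomial basis: columns t^0, t^1, ..., t^degree with t in [0, 1)."""
    t = np.arange(horizon) / horizon
    return np.stack([t ** p for p in range(degree + 1)], axis=1)  # (horizon, degree + 1)

def seasonality_basis(horizon: int, n_harmonics: int) -> np.ndarray:
    """Fourier basis: cos and sin of harmonics 1..n_harmonics over the horizon."""
    t = np.arange(horizon) / horizon
    cols = [np.cos(2 * np.pi * i * t) for i in range(1, n_harmonics + 1)]
    cols += [np.sin(2 * np.pi * i * t) for i in range(1, n_harmonics + 1)]
    return np.stack(cols, axis=1)  # (horizon, 2 * n_harmonics)

horizon = 12
T = trend_basis(horizon, degree=2)
S = seasonality_basis(horizon, n_harmonics=2)

# Hard-coded coefficients for illustration; N-BEATS would predict these.
theta_trend = np.array([10.0, 5.0, 0.0])       # level 10, slope 5, no curvature
theta_season = np.array([2.0, 0.0, 0.0, 0.0])  # a single cosine harmonic

trend_part = T @ theta_trend
season_part = S @ theta_season
forecast = trend_part + season_part  # each component can be inspected on its own
```

Because the forecast is literally the sum of `trend_part` and `season_part`, each component can be plotted separately, which is what makes the decomposition readable.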

Similarly, DeepAR was one of the first models to integrate probabilistic forecasting into Deep Learning.

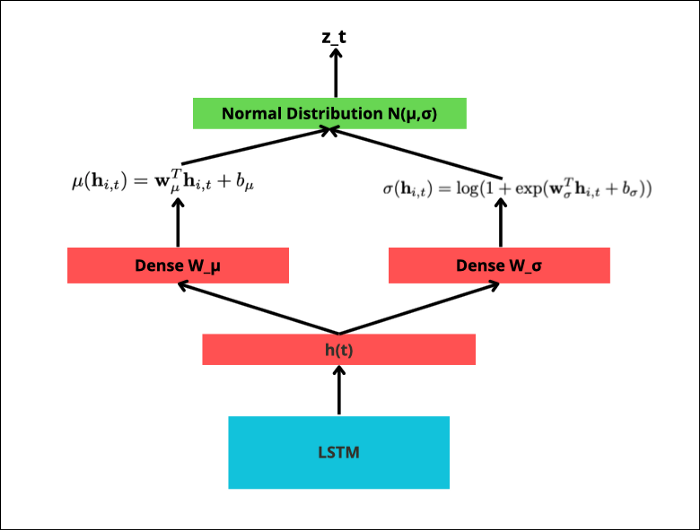

DeepAR functioned as a global model, leveraging cross-learning over multiple time series — and incorporating extra covariates. The model architecture is displayed in Figure 5:

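DeepAR is essentially an autoregressive model that uses an LSTM (plus some extra linear layers) to predict the parameters of a probability distribution. The distribution depends on the data: for example, a Gaussian for real-valued series or a negative binomial for count data. During inference, samples are drawn from this distribution and fed back as inputs to produce the forecasts.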
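The sampling loop described above can be sketched as follows, assuming a Gaussian likelihood. `toy_network` is a hypothetical placeholder for DeepAR's trained LSTM and linear layers. Only the structure is meant to be illustrative: predict (mu, sigma), draw a sample, feed it back as the next input, and repeat. Using softplus to keep sigma positive is a common choice for Gaussian outputs.

```python
import numpy as np

rng = np.random.default_rng(42)

def toy_network(prev_value: float, hidden: float):
    """Hypothetical stand-in for DeepAR's LSTM + linear layers.

    Returns (mu, sigma, new_hidden); a real model learns this mapping.
    """
    new_hidden = 0.8 * hidden + 0.2 * prev_value
    mu = new_hidden
    sigma = np.log1p(np.exp(0.1 * abs(new_hidden)))  # softplus keeps sigma > 0
    return mu, sigma, new_hidden

def sample_paths(last_value: float, hidden: float, horizon: int, n_samples: int):
    """Ancestral sampling: each drawn value becomes the next step's input."""
    paths = np.empty((n_samples, horizon))
    for s in range(n_samples):
        prev, h = last_value, hidden
        for t in range(horizon):
            mu, sigma, h = toy_network(prev, h)
            prev = rng.normal(mu, sigma)  # draw a sample...
            paths[s, t] = prev            # ...and feed it back next step
    return paths

paths = sample_paths(last_value=10.0, hidden=10.0, horizon=5, n_samples=200)
p10, p50, p90 = np.percentile(paths, [10, 50, 90], axis=0)  # prediction intervals
```

Repeating the rollout many times yields an empirical forecast distribution; quantiles such as P10, P50, and P90 then serve as prediction intervals instead of a single point forecast.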

Its success inspired variants like DeepState (a state-space model) and Deep GPVAR (which uses Gaussian copulas).

But while Deep Learning was taking its first steps in time-series forecasting, NLP experienced its revolution with the advent of the Transformer.

Enter Transformers

In 2017, Google introduced the Transformer in the paper with the meme-worthy title "Attention Is All You Need", proposing it as a replacement for its LSTM-based translation model.

The Transformer was the child of 2 models we previously mentioned: WaveNet and stacked LSTMs with attention.

LSTMs with attention can learn longer-range dependencies, but they aren't parallelizable across time steps.

WaveNet uses convolutions, which aren’t ideal for text data but are highly parallelizable.

Thus, the Transformer was created to combine the benefits of both worlds.

The Transformer was an encoder-decoder model that leveraged multi-head attention. Of course, attention (in a simpler form) had already been used in neural machine translation since [Bahdanau et al.].
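For readers who want to see what multi-head attention actually computes, here is a minimal NumPy sketch of self-attention following the softmax(Q K^T / sqrt(d_k)) V formulation from "Attention Is All You Need". Random matrices stand in for learned projection weights, and masking, biases, and dropout are omitted. The function names are illustrative, not from any particular library.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V for arrays shaped (..., seq, d_k)."""
    d_k = Q.shape[-1]
    scores = Q @ np.swapaxes(K, -1, -2) / np.sqrt(d_k)
    weights = softmax(scores, axis=-1)
    return weights @ V, weights

def multi_head_attention(x, W_q, W_k, W_v, W_o, n_heads: int):
    """Self-attention over x (seq, d_model), split into n_heads parallel heads."""
    seq_len, d_model = x.shape
    assert d_model % n_heads == 0, "d_model must be divisible by n_heads"
    d_head = d_model // n_heads

    def split(t):  # (seq, d_model) -> (heads, seq, d_head)
        return t.reshape(seq_len, n_heads, d_head).transpose(1, 0, 2)

    Q, K, V = split(x @ W_q), split(x @ W_k), split(x @ W_v)
    heads, weights = scaled_dot_product_attention(Q, K, V)
    concat = heads.transpose(1, 0, 2).reshape(seq_len, d_model)  # merge heads
    return concat @ W_o, weights

rng = np.random.default_rng(0)
seq_len, d_model, n_heads = 5, 8, 2
x = rng.normal(size=(seq_len, d_model))
W_q, W_k, W_v, W_o = (rng.normal(size=(d_model, d_model)) for _ in range(4))
out, attn = multi_head_attention(x, W_q, W_k, W_v, W_o, n_heads)
```

Every position attends to every other position in a single matrix multiplication, with no recurrence, which is how the Transformer keeps the long-range modeling of attention while gaining the parallelism of convolutions.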

Next, OpenAI stole Google’s thunder by releasing GPT, the first popular variant of the Transformer model. GPT is a decoder-only model, suitable for natural language generation (NLG).
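What makes a decoder-only model like GPT suitable for generation is the causal mask: each position can attend only to itself and earlier positions, so the model can be trained to predict the next token. Below is a minimal single-head NumPy sketch of that masking. For brevity it reuses the input as queries, keys, and values (a real model would apply learned projections first), and the function name is illustrative. An encoder-only model drops this mask and lets every position attend to the full sequence.

```python
import numpy as np

def causal_self_attention(Q: np.ndarray, K: np.ndarray, V: np.ndarray):
    """Scaled dot-product attention where position t only sees positions <= t."""
    seq_len, d_k = Q.shape
    scores = Q @ K.T / np.sqrt(d_k)
    # Entries above the diagonal (j > t) are future positions: block them.
    mask = np.triu(np.ones((seq_len, seq_len), dtype=bool), k=1)
    scores = np.where(mask, -np.inf, scores)
    scores = scores - scores.max(axis=-1, keepdims=True)  # diagonal keeps rows finite
    weights = np.exp(scores)  # exp(-inf) = 0, so masked positions get zero weight
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ V, weights

rng = np.random.default_rng(1)
x = rng.normal(size=(4, 3))
out, w = causal_self_attention(x, x, x)
print(np.round(w, 2))  # lower-triangular: zeros above the diagonal
```

The first position can only attend to itself, so its weight row is all on the diagonal; later positions spread their attention over the past only.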

A few months later, Google released its signature model BERT, an encoder-only model. BERT was suitable for natural language understanding (NLU) tasks, such as text classification and named entity recognition (Figure 6). Hence: